Gigabyte Radeon RX 6600 XT EAGLE 8G review

With the 6600 XT, AMD is doing more with less.

I picked this up for $380 at Micro Center on launch day, right at AMD’s MSRP, and they appeared well-stocked. Availability online doesn’t look so rosy, and of course there’s no telling how things will look in brick-and-mortar stores in a couple more weeks, but that’s still quite a trick.

The variant of the 6600 XT I’ve got is Gigabyte’s lower-end one. Purportedly there won’t be so many of these around, with more volume a notch up the stack (in Gigabyte’s case, that means the GAMING OC). All else equal I’d still rather test the cheap stuff; if it’s good, everything else probably will be too, and if it isn’t, we learn something about what the manufacturer considers alright to ship.

Overclocking and undervolting will be in another article.

Specifications and performance expectations

The 6600 XT is the most interestingly-proportioned card released for this segment in a very long time. It has 32 compute units and 64 ROPs clocked at 2589 MHz (boost) and a 128-bit path to 8 GB of GDDR6 at 16 Gbps. This works out to 10.6 single-precision TFLOPS and 256 GB/s of memory bandwidth, which is about half of a 6800 XT on both sides, but the 6600 XT only has 32 MB of L3 cache compared to the 6800 XT’s 128 MB.
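
For anyone who wants to check the spec-sheet arithmetic, here is a minimal sketch. The 64 shader lanes per compute unit and the convention of counting a fused multiply-add as 2 FLOPs are not stated above; they are the usual assumptions for RDNA 2 and are labeled as such in the code.

```python
# Spec-sheet arithmetic for the RX 6600 XT, using the figures quoted above.
COMPUTE_UNITS = 32
BOOST_GHZ = 2.589
BUS_WIDTH_BITS = 128
MEMORY_GBPS_PER_PIN = 16

# Assumptions not stated in the review: 64 shader lanes per compute unit,
# and one fused multiply-add per lane per clock counted as 2 FLOPs.
LANES_PER_CU = 64
FLOPS_PER_LANE_PER_CLOCK = 2


def fp32_tflops(compute_units, boost_ghz):
    """Peak single-precision throughput in TFLOPS."""
    gflops = compute_units * LANES_PER_CU * FLOPS_PER_LANE_PER_CLOCK * boost_ghz
    return gflops / 1000


def memory_bandwidth_gbs(bus_width_bits, gbps_per_pin):
    """Peak memory bandwidth in GB/s (bits per second across the bus, over 8)."""
    return bus_width_bits * gbps_per_pin / 8


print(f"{fp32_tflops(COMPUTE_UNITS, BOOST_GHZ):.1f} TFLOPS")
print(f"{memory_bandwidth_gbs(BUS_WIDTH_BITS, MEMORY_GBPS_PER_PIN):.0f} GB/s")
```

These are peak figures at the rated boost clock, so they say nothing about sustained clocks in games.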

Cache doesn’t scale like other aspects of a card. If we cut everything about a card including cache in half and try to use the same resolution, it’ll feel like we cut VRAM bandwidth by more than we actually did, since a lot of needed data that used to be cached won’t be anymore. Reducing resolution largely gets around this.

Judging by spec sheets and typical game rendering methods alone (which may miss some very important nuance), the 6600 XT’s 32 MB of cache looks comfortable for 1080p, useful but a bit cramped for 1440p, and very weak for 4K. Otherwise this GPU should be very competent at 1440p, but it may feel bandwidth-starved in some games.
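
To put a rough scale on the cache argument, the sketch below sizes a single full-screen render target at each resolution and compares it to 32 MB of cache. The 4 bytes per pixel is purely an illustrative assumption (think one 8-bit RGBA target); real games juggle several targets in different formats, so this shows only the trend, not actual hit rates.

```python
# Rough scale only: how big is one full-screen render target, and how many
# of them would fit in the cache at once?
CACHE_MIB = 32       # cache size from the spec sheet, treated here as MiB
BYTES_PER_PIXEL = 4  # illustrative assumption, not a measured value

RESOLUTIONS = {
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "4K": (3840, 2160),
}


def target_mib(width, height, bytes_per_pixel=BYTES_PER_PIXEL):
    """Size in MiB of one full-screen render target."""
    return width * height * bytes_per_pixel / 2**20


def targets_that_fit(width, height, cache_mib=CACHE_MIB):
    """How many such targets would fit in the cache simultaneously."""
    return cache_mib / target_mib(width, height)


for name, (w, h) in RESOLUTIONS.items():
    print(f"{name}: {target_mib(w, h):.1f} MiB per target, "
          f"{targets_that_fit(w, h):.1f} fit in {CACHE_MIB} MiB")
```

Under that assumption roughly four targets fit at 1080p, a bit over two at 1440p, and barely one at 4K, which lines up with the comfortable / cramped / very weak reading above.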

AMD intends for the 6600 XT to be used at 1080p, and that’s understandable. The Steam Hardware Survey still shows 1920x1080 monitors with 67.2% market share as of this writing, as opposed to 8.5% for 2560x1440 and 2.3% for 3840x2160. More people buy very powerful graphics cards for 1080p monitors than buy merely adequate graphics cards for higher resolution monitors. I’ve got a rant or three on this, but the market reality that AMD is working with is undeniable.

One other factor may make this card less consistent: it only has 8 PCIe lanes instead of the usual 16. If your CPU and motherboard support PCIe 4.0, that gives it the same bandwidth as the PCIe 3.0 x16 that has been standard (and game developers have been targeting) for a long time, but if it’s only running PCIe 3.0 x8 (as it is in my testing) that may be a noticeable penalty.
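
The lane math can be sketched as follows. The per-lane transfer rates (8 GT/s for PCIe 3.0, 16 GT/s for PCIe 4.0) and the 128b/130b line encoding come from the PCIe specifications rather than from this review, and the result is theoretical one-way bandwidth before protocol overhead.

```python
# Theoretical one-direction PCIe link bandwidth, before protocol overhead.
# Transfer rates and encoding are from the PCIe 3.0/4.0 specifications.
GT_PER_S_PER_LANE = {3: 8, 4: 16}
ENCODING_EFFICIENCY = 128 / 130  # 128b/130b line encoding


def link_gbs(generation, lanes):
    """Approximate usable bandwidth in GB/s for one direction of the link."""
    raw_gbits = GT_PER_S_PER_LANE[generation] * lanes
    return raw_gbits * ENCODING_EFFICIENCY / 8


for gen, lanes in [(3, 16), (4, 8), (3, 8)]:
    print(f"PCIe {gen}.0 x{lanes}: {link_gbs(gen, lanes):.2f} GB/s")
```

PCIe 4.0 x8 and PCIe 3.0 x16 come out identical, while PCIe 3.0 x8 (the configuration tested here) gets half of that.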

With a 237 mm² die and 8 GB of VRAM on a 128-bit interface, AMD should have as good a shot as anyone’s had this year at turning out enough GPUs to meet demand, though of course they probably still won’t succeed at that. It looks distinctly cheaper to produce than the RTX 3060 (much less the 3060 Ti) even assuming that Samsung’s fabrication for Nvidia is much cheaper per wafer.

Physical design and cooling

Gigabyte’s physical design for this card is one I haven’t seen before, with a plastic backplate integrated with the shroud. The integration with the shroud gives it a very solid feel despite keeping metal parts to a minimum. It doesn’t look or feel like the classiest card out there, but it doesn’t give a bad impression either, especially given it’s among the cheapest 6600 XTs.

The major heat-generating components all have to be on the front of the board to make this construction work thermally. The design might not scale well to more powerful cards where board space is at more of a premium.

The card has one important physical issue: all three of the fans rattle at first. It’s getting better quickly as the fans accumulate their first hours, but initially it’s unsubtle and a bit disturbing, and it doesn’t inspire confidence in their long-term reliability.

The card’s cooling system works well. At default settings, it stays around or below 70°C base and 85-90°C hotspot in a 24°C ambient environment while making a moderate amount of noise. The noise has a fairly smooth and unintrusive character aside from the early rattle. Coil whine exists but isn’t excessive. The fans stop at idle, leaving it around 47°C in a 24°C ambient environment.

A significant amount of airflow from the fans bypasses the heatsink to the top and bottom. I was confused by this design at first, but it has a big advantage: it isn’t going to toast an SSD or chipset underneath it, unlike many other cards.

Testing methods

For testing I used a Ryzen 7 5800X, a Gigabyte B450 I AORUS PRO WIFI, and dual-rank DDR4-3200. The older motherboard limits the card to PCIe 3.0 and rules out resizable BAR. Radeon Anti-Lag is always enabled unless otherwise noted, because it makes many subtle problems much more obvious. The full component list is below.

All Components

CPU: AMD Ryzen 7 5800X

RAM: G.Skill F4-3600C16D-32GVKC --- 2x16GB, dual-rank Hynix CJR, tuning details here

GPU: Gigabyte Radeon RX 6600 XT EAGLE 8G

drive: Western Digital Blue SN550 1TB

motherboard: Gigabyte B450 I AORUS PRO WIFI

CPU cooling: Noctua NH-D9L

PSU: SeaSonic FOCUS SGX 500W

case: HighSpeed PC Half-Deck Tech Station

monitor: Nixeus NX-EDG27

audio interface: Behringer UM2

keyboard: WASD V2

mouse: Glorious Model O-

OS: Microsoft Windows 10 20H2

graphics driver: AMD Radeon Software 21.8.1

Performance

We’ll do this a bit differently since I don’t own anything except an RX 570 to compare against; I’m not currently well equipped to answer the question of how it stacks up against the RTX 3060 and 3060 Ti. Fortunately there’s no shortage of good reviews out there for the relative differences between cards. TechPowerUp has particularly comprehensive relative data if you don’t have one in mind.

The question I can work with is whether it’s enough of a graphics card in absolute terms to do what you want. To that end, we’ll look at a technologically diverse array of games, take a bit longer to find heavy scenes in those games, and touch on how performance is affected by graphics settings. What’s missing is more precise numbers: the exact choice of scenes puts fairly wide error bars on the results anyway, so there’s not much point in them when we’re not trying to tease out small differences between cards. (Also I’m far enough behind schedule as it is.)

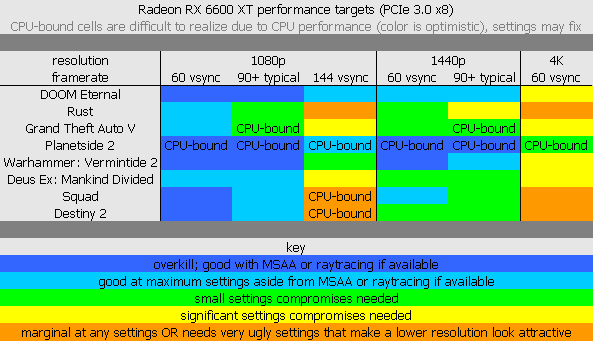

Some themes show up quickly in the results. At 1440p, 60 Hz vsync or 85+ typical FPS is usually not much trouble, but often needs some small settings compromises. Figuring out which settings matter most is likely more trouble than the visual impact of changing them. At 1080p the card feels comfortably overkill for that kind of framerate target, but it isn’t quite as good as expected at higher framerate targets; 144 Hz vsync still feels like a reach more often than not, for instance. PCIe 3.0 x8 with this motherboard might be holding it back in that area. 4K60 is, as expected, not a very good bet.
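
It can help to think of those framerate targets as per-frame time budgets. This is plain arithmetic (1000 ms divided by the target framerate), not anything measured on the card:

```python
# Per-frame time budgets for the framerate targets discussed above.
TARGETS_FPS = [60, 85, 144]


def frame_budget_ms(fps):
    """Milliseconds available to produce each frame at a given framerate."""
    return 1000 / fps


for fps in TARGETS_FPS:
    print(f"{fps} FPS -> {frame_budget_ms(fps):.2f} ms per frame")
```

Going from 60 to 144 FPS cuts the budget from about 16.7 ms to about 6.9 ms, so any fixed per-frame cost takes a proportionally bigger bite at high refresh rates.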

Post-processing and extra effects tend to be the settings with the most performance impact, while anything controlling geometric detail or shadow resolution tends to be unusually cheap to max out. MSAA is very heavy; if you’ve got to have it, this may not be the card for you.

This card typically runs at 2.45 to 2.6 GHz in GPU-bound gaming.

DOOM Eternal

1080p at maximum non-raytracing settings gets 150-200 FPS, 1440p gets 100-140 FPS, and 4K struggles to stay over 60 FPS. Raytracing at 1080p is barely slower than 1440p without it, but the texture pool size has to be turned down a notch or two to fit it in VRAM. Apply the smaller texture pool size before turning on raytracing (not at the same time) or the game will behave as though it’s out of VRAM despite the right settings.

Settings collectively have surprisingly little performance impact. You may as well run it on ultra nightmare, and if resolution and raytracing aren’t enough control, you’ll be hard-pressed to squeeze much extra performance out of it.

Rust

Rust at maximum settings is the heaviest non-MSAA game here, struggling badly even to maintain 1440p60. Depth of field, contact shadows, and grass shadows (the latter two of which are appropriately in the experimental section) don’t seem worth it for this card unless you’ve only got a 1080p60 monitor. Without them, 1440p keeps a usually-comfortable margin over 60 and 1080p spends more time in three figures than not. 4K60 is mostly hopeless regardless.

At reduced settings the game can run a whole lot faster than this, but it gets very ugly very quickly. A lot of settings are less harsh on the eyes and would be useful elsewhere but just don’t help performance much on this card.

Grand Theft Auto V

I tested this with three settings below maximum: MSAA was off, grass was very high (instead of ultra), and extended shadows distance was minimum. It’s easy to guess that this card and MSAA won’t be the best of friends and GTA V’s MSAA delivers on that. Grass is the main place the benchmark is optimistic, and turning it down a notch brings performance elsewhere in the game back into line with the benchmark. Extended shadows distance is buggy in the menu and not possible to increase.

The in-game benchmark ranges from about 60 to 140 FPS at 1080p, 60 to 105 FPS at 1440p, 50 to 85 FPS at 1440p with 2x MSAA, and 35 to 60 FPS at 4K. The 1080p and 1440p minimums would be higher if it weren’t getting CPU-bound at times.

Planetside 2

At 1440p maximum settings, smoke-filled scenes quickly bog it to around 90 FPS. Absent smoke or if particles are turned down to high, it has an easy enough time holding 110+ at maximum settings or 130+ with shadows and motion blur turned off. Either way, it’s regularly CPU-bound a bit lower than this in bigger fights despite the powerful CPU and dual-rank RAM. (Turning off shadows is the usual way to play since shadows are very CPU-heavy as well.)

Players aiming for 200+ FPS in lighter scenarios will want to either drop settings much more sharply or drop to 1080p, but there’s no need for both and no need for more careful tuning (so long as the CPU side can handle it, which is far from a given). 4K60 vsync at maximum settings is marginal, and it will take a couple of settings reductions to make it solid. No motion blur, high particles, and medium shadows should do the trick. Again, it takes an excellent CPU to maintain 60 fps vsync in all scenarios if shadows are enabled.

Warhammer: Vermintide 2

I used DirectX 11, because 12 is a lot slower here. This was the same with my RX 570.

1080p max settings bottoms out around 130 FPS, 1440p max settings 90 FPS, and 4K max settings 40 FPS. This is the sort of sharp cliff that looks like the 32MB cache isn’t holding up well enough at 4K.

Post-processing is very expensive here, and with less post-processing 4K60 (or 1440p120) vsync is entirely achievable. 1440p144 vsync is probably still marginal even with settings reductions. Other settings don’t help much.

Deus Ex: Mankind Divided

While this isn’t something many people are likely playing these days, it’s interesting because it has some tells of a game this card may not like, especially with MSAA enabled. Part of that proved correct at least: even 2x MSAA really only makes sense if you have a 1080p60 monitor.

Using DirectX 12 (which runs faster in this case) at maximum settings except for no MSAA, it struggles for 1440p60, but turning down contact hardening shadows and depth of field a notch each gives it plenty of margin. 1080p at these settings stays easily in three figures as usual, and 4K is mostly hopeless at higher settings as usual.

The game’s settings work well, and you can get much higher framerates or make 4K60 workable with them without much trouble.

Squad

This one is a bit fuzzy, because the nature of the game is that it’s tough to run through a lot of scenarios quickly to find the heaviest ones, and I haven’t played enough to be able to go right to them, but I know they exist. Looking around Jensen’s Range (which is definitely not as heavy as it gets) at maximum settings, the slowest scene I can find without resorting to excess particles goes 127 FPS at 1080p, 82 FPS at 1440p, and 41 FPS at 4K. The graphics settings are effective, and give a lot of flexibility without ever getting too ugly.

Particles are usually fine but can get messy in certain (not very realistic) cases like sustained machine gun fire kicking up dirt at close range. If this is an issue, resolution is the only thing that helps it much. This is the reason I marked 1080p144 and 4K60 marginal on the chart.

Destiny 2

This one’s again a bit fuzzy, for the same reasons as Squad. The heaviest scenes close at hand (which again are not the heaviest in the game) with depth of field one tick down from maximum go about 110 FPS CPU-bound at 1080p, 80 FPS at 1440p, and 45 FPS at 4K.

Ambient occlusion and depth of field are the two heaviest settings on this card by far, heavier than all other settings combined. Dropping depth of field off of maximum goes a long way all on its own. Minimum settings don’t impress with their speed, but they’re not too harsh on the eyes either.

Power use and efficiency

Total system power use at the wall (through a SeaSonic FOCUS SGX 500W PSU) is about 30W at idle and 250 to 300W under GPU-bound gaming loads. The card itself reports 3W at idle and 130W under full load (compare that against the card’s 160W rated TBP, which probably includes things the reporting doesn’t).

This card is very efficient at partial load, dropping to lower areas of its frequency/voltage curve as soon as the workload isn’t GPU-bound. This is an inherent tradeoff against CPU-bound input-to-display latency, but there are usually only a couple of milliseconds to be had here against very large differences in power consumption.

Bugs

Sometimes games stutter when FreeSync is enabled. Unlike a similar issue with Polaris cards, the missing frames are properly accounted for on framerate counters, and overlays don’t seem to affect it. It also did something entirely unique for one game on one evening only: while FreeSync was enabled, the game was capped at 67 FPS in a microstuttering pattern. This stutter usually isn’t present, and it hasn’t been repeatable enough to research much further. I’ve been running tests with FreeSync off for now in case it matters, but have been otherwise gaming with FreeSync on and it’s rarely noticeable.

Disabling FreeSync while connected to a 144 Hz monitor makes the VRAM unable to idle. This draws a bit under 20W extra at idle both at the wall and according to the card’s reporting, and I suspect that a similar issue has been wearing out my VRAM prematurely on multiple previous graphics cards (but on this one it’s much tougher to run into). Dropping to 120 Hz fixes it, but of course that’s not a great solution. The variant of the problem seen on this card shouldn’t be an issue for most people, but if you need to disable FreeSync to bypass the stutter bug or have a 144+ Hz monitor that doesn’t support FreeSync then it’s a serious problem.

Gigabyte contributes one physical issue: the fans rattle at first. It’s getting better quickly as the fans accumulate their first hours, but initially it’s unsubtle and a bit disturbing, and it doesn’t inspire confidence in their long-term reliability.

Pricing and competition

The most important thing for a GPU design in 2021 is to make efficient use of limited wafer and VRAM supplies. That’s the only thing that gets more and better cards into the hands of gamers yet this year. At that, AMD’s work here is masterful. The 6600 XT does more with less, and it’s a joy to see that in action.

Marketing is where it gets rough, not through any fault of AMD’s marketing team, but just because it’s an awkward product to market. It’s only right on the threshold of being easy to recommend for 1440p, and if it’s recommended too strongly for 1440p, people are going to assume that means 1440p at unconditional maximum settings, not think about it too hard, and have a bad time. This effect is exaggerated by the non-standard mix of resources, since there’s a bigger gap between the typical and worst-case performance.

Add in the current pricing situation and it gets even worse. While GPU MSRPs bear little to no resemblance to reality right now, AMD still has to set an MSRP, and they have no good options. The one they chose is so far only slightly disconnected from reality instead of entirely disconnected, which I appreciate. Unfortunately, when people inevitably make MSRP-to-MSRP comparisons it makes the 6600 XT look like a bad deal compared to Nvidia’s RTX 3060 Ti, which convincingly crosses that threshold of being easy to recommend for 1440p.

Luckily for the 6600 XT, if you actually go to buy a graphics card right now, you’ll find that it delivers the most performance per dollar by a solid margin. Online retailers don’t have much stock, but even on eBay it’s a touch cheaper than the (slower) 3060 non-Ti, and in brick-and-mortar stores it’s both available and priced in a class of its own.

Conclusions

As long as the status quo holds, the 6600 XT is the uncontested value king. While there’s room to argue how much of the GPU market that applies to, in its own segment it isn’t even close. Pricing and availability over the next couple of months are of course an open question, but there’s some reason for optimism.

That doesn’t say anything about its value in comparison to last year’s or next year’s pricing. If you can wait, you probably should.

While its average performance is solid, its lack of VRAM bandwidth sometimes shows through. If you’re a big fan of MSAA or raytracing you’ll likely be disappointed at how it handles them. On the other hand, if you like to keep only a lighter touch of post-processing like I often do, it flies.

For Gigabyte’s part, while the card is otherwise very well done, the fan rattle is a major concern. I’m comfortable with it for my own use: on a low-end variant of a card I expect some cut corner or another, and if the fans die at some point that’s at least a cut corner I’m well-equipped to patch together a solution for. I can’t recommend this variant to anyone who can’t say the same, though.